The importance of environment perception technology in autonomous driving.

Autonomous driving is a promising industry within intelligent mobility, and the perception module plays a vital role in any autonomous driving system. The key technologies of autonomous vehicles include perception of the road environment, planning of the driving path, intelligent decision-making about the vehicle's motion, and adaptive motion control. At present, the immaturity of environment perception technology remains the main factor limiting the overall performance of autonomous vehicles, and it is also the biggest obstacle to their large-scale commercialization.

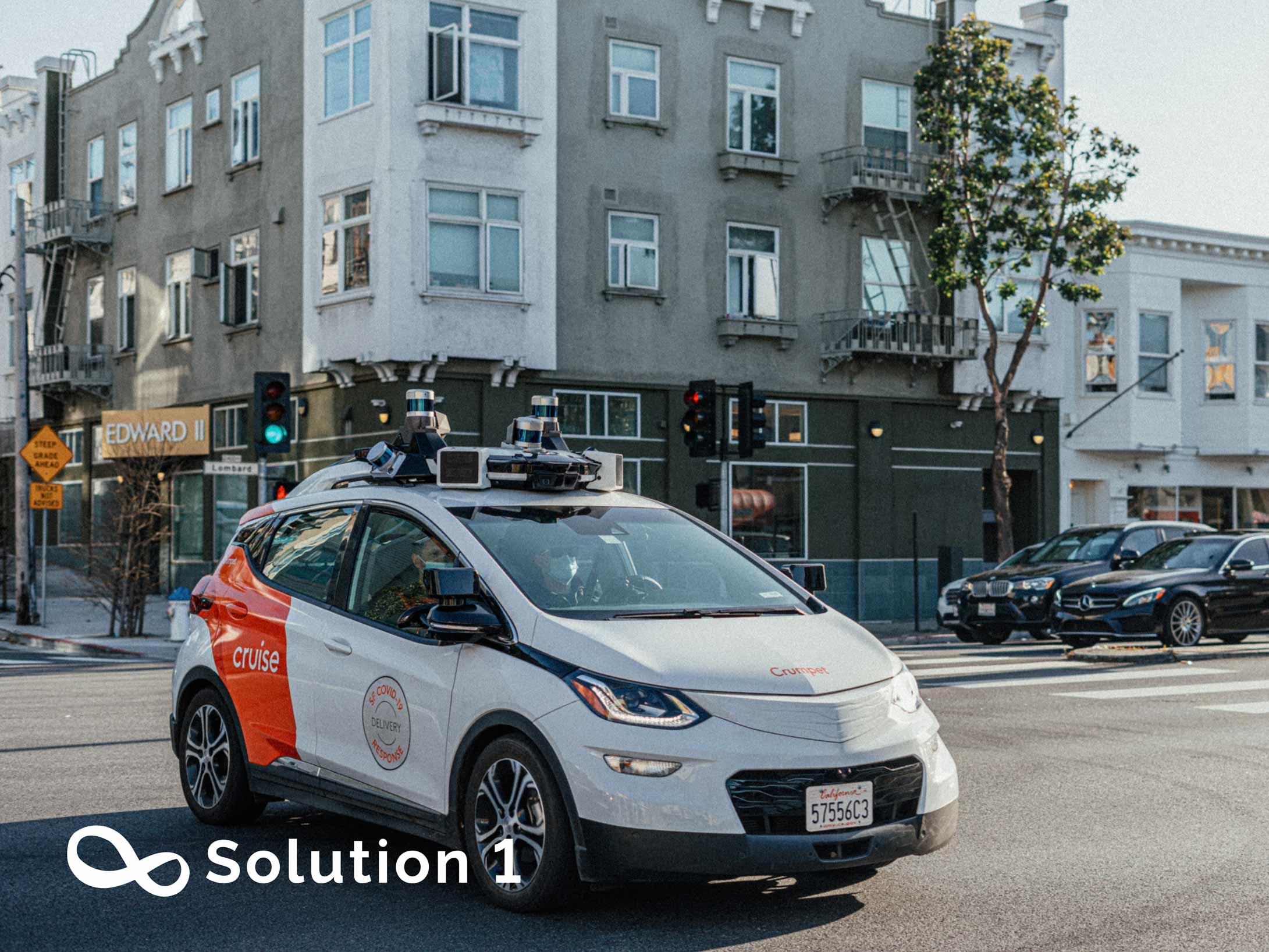

The sensors currently used in the perception module of autonomous vehicles mainly include cameras, millimeter-wave radar, ultrasonic radar, and LiDAR. Cameras offer high resolution, high frame rates, rich visual information, and low cost; LiDAR provides accurate 3D perception and rich geometric information. Autonomous vehicles carry a variety of these sensors, and because different types complement one another functionally, together they improve the safety of the autonomous driving system. Fusion technology is key to exploiting the strengths of each sensor.

Gaps remain before autonomous vehicles can operate reliably in real, complex road traffic scenarios, which is why the environment perception system is such an important part of the autonomous vehicle. Its main task is to detect and classify road obstacles, traffic signs, traffic lights, pedestrians, vehicles, and other objects, so that the vehicle can analyze its surroundings and determine its position within them.

The environment perception system is one of the key technologies of the autonomous vehicle. It is essential for accurately understanding the semantics of a traffic scene and for making the corresponding driving decisions, and it underpins both the safety and the intelligence of driving. An autonomous driving system consists of a perception layer, a decision layer, and a control layer, supported by high-precision maps and the Internet of Vehicles. The hardware sensors in the perception layer capture the vehicle's own position as well as information about the external environment; common onboard sensors include LiDAR, cameras, and millimeter-wave radar.

Using the input from the perception layer, the decision layer models the environment, builds a global understanding of the scene, makes decisions, and issues commands for the vehicle to execute. Finally, the control layer converts these commands into actions of the vehicle.
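The three-layer flow described above can be sketched as a chain of three functions, each consuming the previous layer's output. This is a minimal illustration under stated assumptions, not an architecture taken from the source: the `Obstacle` and `Command` types, the function names, and the 20 m safe-gap threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


# HYPOTHETICAL data types, for illustration only.
@dataclass
class Obstacle:
    kind: str          # e.g. "pedestrian", "vehicle"
    distance_m: float  # distance ahead of the ego vehicle, in meters


@dataclass
class Command:
    throttle: float  # 0..1
    brake: float     # 0..1


def perception_layer(raw_detections: List[dict]) -> List[Obstacle]:
    """Turn raw sensor outputs into structured obstacles."""
    return [Obstacle(d["kind"], d["distance_m"]) for d in raw_detections]


def decision_layer(obstacles: List[Obstacle], safe_gap_m: float = 20.0) -> str:
    """Form a (very) simple global judgement and pick a behaviour."""
    nearest = min((o.distance_m for o in obstacles), default=float("inf"))
    return "brake" if nearest < safe_gap_m else "cruise"


def control_layer(decision: str) -> Command:
    """Convert the decision signal into actuator commands."""
    if decision == "brake":
        return Command(throttle=0.0, brake=0.8)
    return Command(throttle=0.3, brake=0.0)


def step(raw_detections: List[dict]) -> Command:
    """One perception -> decision -> control cycle."""
    return control_layer(decision_layer(perception_layer(raw_detections)))
```

For example, `step([{"kind": "pedestrian", "distance_m": 12.0}])` yields a braking command, while `step([])` keeps cruising. Keeping the layers as separate functions mirrors the separation in the text: each layer can be replaced (for instance, a learned planner in place of the threshold rule) without touching the others.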

Although LiDAR and cameras can each detect objects on their own, each sensor has its limitations. LiDAR is susceptible to severe weather such as rain, snow, and fog, and its resolution is quite limited compared with that of a camera. Cameras, in turn, are affected by lighting, detection distance, and other factors. The two kinds of sensors therefore need to work together to complete the object detection task in a complex and changeable traffic environment. Depending on the stage at which fusion takes place, object detection methods based on camera and LiDAR fusion are usually divided into early fusion (data-level or feature-level fusion) and decision-level fusion (late fusion).
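To make decision-level (late) fusion concrete, the sketch below matches camera and LiDAR detections by box overlap and merges their confidences. It is one simple variant, not the method of any particular paper, and it rests on labeled assumptions: LiDAR detections are assumed to be already projected into the image as 2D boxes, matching is greedy by intersection over union (IoU), matched scores are combined with a noisy-OR rule that assumes independent detector errors, and the `late_fuse` name and both thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Axis-aligned 2D box (x1, y1, x2, y2) in image pixels. ASSUMPTION: LiDAR
# detections have already been projected into the camera image.
Box = Tuple[float, float, float, float]


@dataclass
class Detection:
    box: Box
    score: float  # detector confidence in [0, 1]
    label: str


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def late_fuse(cam: List[Detection], lidar: List[Detection],
              iou_thr: float = 0.5, keep_single: float = 0.7) -> List[Detection]:
    """Decision-level fusion of two independent detector outputs (hypothetical)."""
    fused: List[Detection] = []
    used = set()  # indices of LiDAR detections already matched
    for c in cam:
        # Greedily find the best unmatched LiDAR box with the same label.
        best_j, best_iou = -1, 0.0
        for j, det in enumerate(lidar):
            if j in used or det.label != c.label:
                continue
            overlap = iou(c.box, det.box)
            if overlap > best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0 and best_iou >= iou_thr:
            used.add(best_j)
            # Both sensors agree: noisy-OR combination, which ASSUMES the two
            # detectors' errors are independent. Keep the camera box.
            score = 1.0 - (1.0 - c.score) * (1.0 - lidar[best_j].score)
            fused.append(Detection(c.box, score, c.label))
        elif c.score >= keep_single:
            fused.append(c)  # camera-only detection, kept only if confident
    for j, det in enumerate(lidar):
        if j not in used and det.score >= keep_single:
            fused.append(det)  # LiDAR-only detection, kept only if confident
    return fused
```

Requiring a higher score for single-sensor detections is one way to let each sensor cover the other's weak conditions (a camera at night, LiDAR in fog) without admitting every false positive; in practice the thresholds would need tuning on real data.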

Using multiple sensors greatly increases the amount of information that must be processed, and the sensors may even report conflicting information. It is therefore critical that the system processes data quickly, filters out useless and erroneous information, and makes timely and correct decisions. As this analysis shows, multi-sensor fusion is not difficult to achieve at the hardware level; the main complexity lies in the algorithms. Because fusion software and hardware are tightly coupled and hard to separate, multi-sensor fusion will remain a challenging task in the future.
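One common way to merge redundant measurements while rejecting conflicting ones is a consistency gate followed by inverse-variance weighting. The sketch below fuses a camera and a LiDAR range estimate for the same object. It assumes independent Gaussian errors with known variances; the `fuse_ranges` name and the 3-sigma gate are hypothetical choices, not something the text prescribes.

```python
import math
from typing import Optional, Tuple


def fuse_ranges(z_cam: float, var_cam: float,
                z_lidar: float, var_lidar: float,
                gate: float = 3.0) -> Optional[Tuple[float, float]]:
    """Fuse two range measurements (meters) of the same object.

    ASSUMES independent Gaussian errors with the given variances (m^2).
    Returns (fused_range, fused_variance), or None if the readings conflict.
    """
    # Normalized difference: how many standard deviations apart the readings are.
    d = abs(z_cam - z_lidar) / math.sqrt(var_cam + var_lidar)
    if d > gate:
        # Conflicting information: do not average; let a higher-level
        # check decide which sensor (if either) to trust.
        return None
    # Inverse-variance weights: the more precise sensor gets more influence.
    w_cam, w_lidar = 1.0 / var_cam, 1.0 / var_lidar
    fused = (w_cam * z_cam + w_lidar * z_lidar) / (w_cam + w_lidar)
    return fused, 1.0 / (w_cam + w_lidar)
```

For example, a camera reading of 20 m (variance 4 m²) and a LiDAR reading of 21 m (variance 1 m²) agree within the gate and fuse to 20.8 m with variance 0.8 m², smaller than either input; two readings 10 m apart with variances of 0.25 m² are rejected as conflicting.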

Recent research in autonomous driving shows significant advances in perception systems, including the use of AI and machine learning algorithms that allow the vehicle to interpret and understand complex traffic scenarios. Autonomous driving systems are also expected to incorporate advanced mapping technology and sensors that perform better across different weather and lighting conditions. In addition, integrating V2X (vehicle-to-everything) communication could enable self-driving cars to communicate with other vehicles, road infrastructure, and pedestrians, improving safety and reducing the potential for accidents. In summary, there have been several promising developments in autonomous driving, and researchers continue to work toward a fully autonomous driving system that could revolutionize the transportation industry.